Generated by Bing Image Creator: Long-wide landscape view of large fall and river, flowering dandelions, photo

This post is a follow-up to the post above.

In this post, I will practice classification: predicting whether each observation is Male or Female.

First, I make a new data frame with a dummy variable (1 or 0) for Male.

Then, I make a training set and a test set.
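
The code at the end of this post reuses a `divide_index` that is not created here. As a minimal sketch of how such an index could be built, here is a base R version on a toy data frame. The toy data, the 60/40 ratio, and the names `toy`, `toy_train` and `toy_test` are assumptions for illustration, not the split actually used in this series.

```r
# Toy data frame standing in for gym_gender (hypothetical values)
set.seed(1)
toy <- data.frame(
  height = rnorm(100, mean = 1.7, sd = 0.1),
  male   = rbinom(100, size = 1, prob = 0.5)
)

# Draw 60% of the row numbers at random for the training set
# (the 60/40 ratio is an assumption, not the ratio used in this series)
divide_index <- sample(nrow(toy), size = floor(0.6 * nrow(toy)))

toy_train <- toy[divide_index, ]   # rows listed in the index
toy_test  <- toy[-divide_index, ]  # all remaining rows

nrow(toy_train)
nrow(toy_test)
```

Because the test set is selected with a negative index, no row can end up in both sets.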

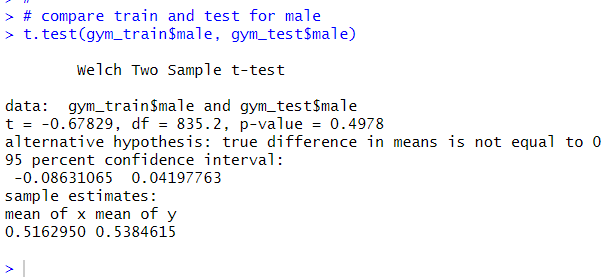

Before the classification practice, let's check whether the male proportion differs significantly between the training set and the test set.

The p-value is 0.4978, so I cannot reject the null hypothesis that gym_train and gym_test have the same male proportion.
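
This post compares the two sets with `t.test()` on the 0/1 dummy. Since the quantity of interest is a proportion, `prop.test()` from base R is an alternative. The sketch below runs both on simulated 0/1 vectors. The vectors and their sizes are made up, so the p-values will not match the 0.4978 reported above.

```r
# Hypothetical 0/1 vectors standing in for gym_train$male and gym_test$male
set.seed(2)
train_male <- rbinom(600, size = 1, prob = 0.5)
test_male  <- rbinom(400, size = 1, prob = 0.5)

# Number of males and number of observations in each set
male_counts <- c(sum(train_male), sum(test_male))
set_sizes   <- c(length(train_male), length(test_male))

# Two-sample test of equal proportions
prop_res <- prop.test(x = male_counts, n = set_sizes)
prop_res$p.value

# Welch t-test on the same 0/1 values, the approach used in this post
t_res <- t.test(train_male, test_male)
t_res$p.value
```

In both tests, a large p-value means there is no evidence that the male proportion differs between the two sets.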

All right, let's make a logit model.

I remove unimportant variables with stepwise selection.

In the resulting model, larger values of age, height, calories, water, and bmi are associated with Male.
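
To show how the direction of an effect is read from a logit model, here is a small sketch on simulated data. The data and the relationship between `height` and `male` are invented for illustration, and `toy_mod` is a hypothetical name. It is not the model fitted in this post.

```r
set.seed(3)
n <- 500
height <- rnorm(n, mean = 1.7, sd = 0.1)

# Made-up relationship: taller people are more likely to be male
male <- rbinom(n, size = 1, prob = plogis(15 * (height - 1.7)))
toy <- data.frame(height, male)

toy_mod <- glm(male ~ height, data = toy, family = binomial)

# Log-odds scale: a positive coefficient means larger height -> more likely male
coef(toy_mod)

# Odds-ratio scale: a positive coefficient becomes a value above 1
exp(coef(toy_mod))
```

A positive coefficient in `summary(logit_mod)` is read the same way: a larger value of that variable raises the predicted probability of Male, holding the other variables fixed.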

Let's predict.

Let's make a confusion matrix using the caret package to see how well the logit model predicts.

The logit model predicts correctly with 97.44% accuracy.

Among 390 observations, only 10 were misclassified.
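
The accuracy figure follows directly from these two counts. A quick check, using only the numbers reported above:

```r
n_test  <- 390   # test observations reported in the post
n_wrong <- 10    # misclassified observations reported in the post

# Accuracy is the share of correctly classified observations
accuracy <- (n_test - n_wrong) / n_test
round(100 * accuracy, 2)   # 97.44
```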

Next, let's make a decision tree model.

Find the best CP.

Prune the tree model with the best CP.
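
To make the "grow large, then prune at the CP with the lowest cross-validated error" idea concrete, here is a sketch on the `kyphosis` data that ships with the rpart package. The data set and the names `big_tree` and `pruned_tree` are stand-ins for illustration, not the gym data or the objects used in this post.

```r
library(rpart)  # also provides the kyphosis example data

set.seed(333)

# Grow a deliberately large tree: tiny cp and minsplit allow many splits
big_tree <- rpart(Kyphosis ~ Age + Number + Start,
                  data = kyphosis,
                  method = "class",
                  minsplit = 3,
                  cp = 1e-8)

# cptable has one row per candidate subtree; xerror is the cross-validated error
cp_tab  <- big_tree$cptable
min_row <- which.min(cp_tab[, "xerror"])
best_cp <- cp_tab[min_row, "CP"]

# Cut the tree back to the subtree with the lowest cross-validated error
pruned_tree <- prune(big_tree, cp = best_cp)

# Count leaves before and after pruning
sum(big_tree$frame$var == "<leaf>")
sum(pruned_tree$frame$var == "<leaf>")
```

The cross-validation folds are drawn at random, which is why `set.seed()` is called before `rpart()`. A different seed can select a different CP.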

Draw a decision tree graph.

The decision tree uses water, weight, height, fatpct and hours.

Predict with the test set.

Let's see the confusion matrix.

Accuracy is 96.92%.
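
For comparison with the logit model, the reported accuracy can be turned back into counts. This is only a back-of-the-envelope check, assuming the tree was evaluated on the same 390 test observations. The counts are derived from the rounded accuracy, not read from the confusion matrix output.

```r
n_test   <- 390      # size of the test set reported earlier in the post
tree_acc <- 0.9692   # decision tree accuracy reported in the post

# Implied number of correct and wrong predictions
n_correct <- round(tree_acc * n_test)
n_wrong   <- n_test - n_correct
n_correct   # 378
n_wrong     # 12

# Recomputing the accuracy from the counts gives back the reported figure
round(100 * n_correct / n_test, 2)   # 96.92
```

Under that assumption, the tree makes 12 mistakes against 10 for the logit model.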

That's it. Thank you!

To read from the first post,

The code for this post is below.

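# Packages used below. gym_raw and divide_index are not defined in this post;

# they are assumed to come from the previous post.

library(dplyr)       # mutate(), select(), glimpse(), if_else()

library(rpart)       # rpart(), prune()

library(rpart.plot)  # rpart.plot()

#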

# make dummy variable for Male

gym_gender <- gym_raw |>

mutate(male = if_else(gender == "Male", 1, 0)) |>

select(-gender)

glimpse(gym_gender)

#

# divide training set and test set

gym_train <- gym_gender[divide_index, ]

gym_test <- gym_gender[-divide_index, ]

#

# compare train and test for male

t.test(gym_train$male, gym_test$male)

#

# Logit model

logit_mod <- glm(male ~ ., data = gym_train,

family = binomial)

summary(logit_mod)

#

# remove unimportant variables (stepwise selection by AIC)

logit_mod <- step(logit_mod, trace = FALSE)

summary(logit_mod)

#

# predict

logit_pred <- predict(logit_mod, newdata = gym_test,

type = "response")

logit_pred <- if_else(logit_pred > 0.5, 1, 0)

#

# Confusion Matrix

caret::confusionMatrix(factor(logit_pred),

factor(gym_test$male))

#

# make decision tree

set.seed(333)

dec_tree <- rpart(male ~ .,

data = gym_train,

method = "class",

minsplit = 3,

cp = 1e-8)

#

# best CP

min_row <- which.min(dec_tree$cptable[ , "xerror"])

best_CP <- dec_tree$cptable[min_row, "CP"]

best_CP

#

# prune the tree

dec_tree <- prune(dec_tree, cp = best_CP)

#

# Decision Tree Graph

rpart.plot(dec_tree,

type = 1)

#

# prediction

tree_pred <- predict(dec_tree, newdata = gym_test,

type = "class")

#

# Confusion Matrix

caret::confusionMatrix(factor(tree_pred),

factor(gym_test$male))

#